Will GPUs Replace CPUs? Understanding Core Differences

GPU vs. CPU is a parallelization vs. complexity dilemma. GPUs can handle very large parallel calculations, but they struggle with sequential, more heterogeneous tasks, where CPUs excel.

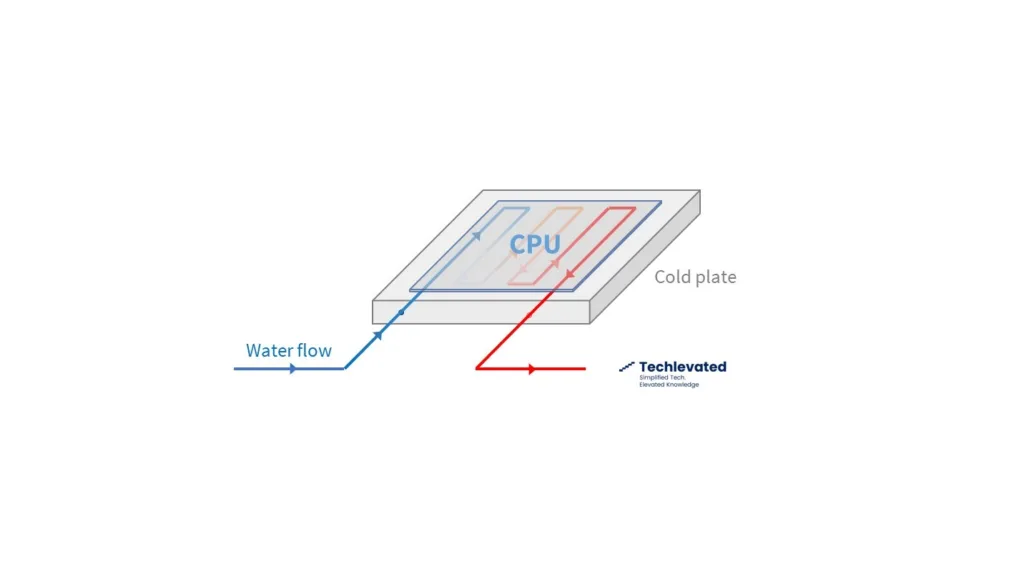

Direct-to-chip cooling system

Direct-to-chip cooling (a form of liquid cooling also known as cold plate cooling) is becoming the go-to solution for cooling high-powered AI data centers. These data centers, which handle AI tasks such as training large language models (LLMs) and running inference on them, pack far more power into their server racks than standard facilities. Traditional server racks have power densities of 5 to 10 kW, while AI server racks can reach roughly ten times that, between 60 and 100 kW. Traditional air cooling is simply not enough for these supercharged systems, so liquid cooling is needed.

This sharp increase in server rack power density is driven by the use of graphics processing units (GPUs), which excel at parallel processing. GPUs, alongside CPUs and high-bandwidth memory (HBM), are used to crunch vast amounts of data and run complex algorithms. Because of this higher power density, traditional air cooling mechanisms such as raised floors or computer room air handlers (CRAHs) cannot adequately cool these high-performance computing (HPC) racks. Failing to cool a server rack properly can have serious consequences, including overheating, higher energy consumption, and performance degradation.

In response to this challenge, the industry is switching to liquid cooling, with direct-to-chip liquid cooling emerging as the frontrunner. In direct-to-chip cooling, water flows through a thermally conductive cold plate mounted on the electronic components, removing heat much faster than air cooling. Because the cold plate sits in direct contact with heat-generating components such as processors and memory, it dissipates far more heat and helps sustain performance.
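To make the water-versus-air gap more concrete, the sketch below applies the standard sensible-heat balance, Q = ṁ · c_p · ΔT, to estimate how much coolant flow it takes to carry away one rack's heat. It is a back-of-the-envelope illustration, not a design method: the property values are approximate room-temperature figures, the 10 K coolant temperature rise is an assumption, the function names are hypothetical, and it simplifies by assuming the coolant captures all of the rack's heat.

```python
# Back-of-the-envelope sensible-heat balance: Q = m_dot * c_p * delta_T.
# All property values below are approximate room-temperature assumptions.
WATER_CP = 4180.0       # specific heat of water, J/(kg*K)
WATER_DENSITY = 1000.0  # density of water, kg/m^3
AIR_CP = 1005.0         # specific heat of air, J/(kg*K)
AIR_DENSITY = 1.2       # density of air near 20 °C at sea level, kg/m^3


def mass_flow(heat_w: float, cp: float, delta_t_k: float) -> float:
    """Mass flow (kg/s) needed to absorb heat_w watts with a delta_t_k kelvin temperature rise."""
    if delta_t_k <= 0:
        raise ValueError("temperature rise must be positive")
    return heat_w / (cp * delta_t_k)


def volumetric_flow(heat_w: float, cp: float, density: float, delta_t_k: float) -> float:
    """Volumetric flow (m^3/s) of a coolant with the given properties for the same heat load."""
    return mass_flow(heat_w, cp, delta_t_k) / density


if __name__ == "__main__":
    rack_heat_w = 100_000.0  # upper end of the 60 to 100 kW AI rack range
    delta_t = 10.0           # assumed coolant temperature rise, K

    water = volumetric_flow(rack_heat_w, WATER_CP, WATER_DENSITY, delta_t)
    air = volumetric_flow(rack_heat_w, AIR_CP, AIR_DENSITY, delta_t)

    print(f"water: {water * 1000 * 60:.0f} L/min")  # convert m^3/s to litres per minute
    print(f"air:   {air:.2f} m^3/s")
    print(f"air needs about {air / water:.0f}x the volume flow of water")
```

Under these assumptions, a 100 kW rack needs on the order of 140 litres of water per minute, but more than 8 cubic metres of air per second. The ratio comes from the heat each coolant stores per unit volume (c_p × density), which is roughly 3,500 times higher for water than for air.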

However, liquid cooling is not without challenges. First, it is far more complex than traditional air cooling, adding heat exchangers, pumps, reservoirs, and plumbing. It also requires an effective coolant distribution network throughout the data center, where flow rates and pressure levels must be taken into account. In a data center retrofit, the liquid cooling system must also be integrated with the existing air cooling systems.

Second, direct-to-chip cooling requires much higher capital investment and operating costs. Installation takes longer and costs more, and maintenance is heavier: operators must monitor for humidity, leaks, and corrosion, and service pumps and filtration systems.

For now, direct-to-chip cooling is best suited to hyperscale data centers, since these facilities have the economies of scale and expertise to justify a higher upfront investment and to maintain liquid cooling systems properly. Industry leaders such as Meta, Google, and Equinix are already installing liquid cooling systems in their data centers.

John Niemann, Vertiv’s Senior Vice President, said in a recent earnings call that “direct-to-chip cooling is where we are seeing scale being deployed”, suggesting it is on track to become the default solution for cooling AI data centers. Vertiv, which manufactures equipment and cooling systems for data centers, saw its sales increase substantially in 2023 thanks to strong demand for cooling systems for AI data centers.

AI workloads require higher server rack power density and generate more heat. Direct-to-chip liquid cooling is emerging as the preferred cooling solution for AI, with leaders such as Meta, Google, and Equinix adopting it.

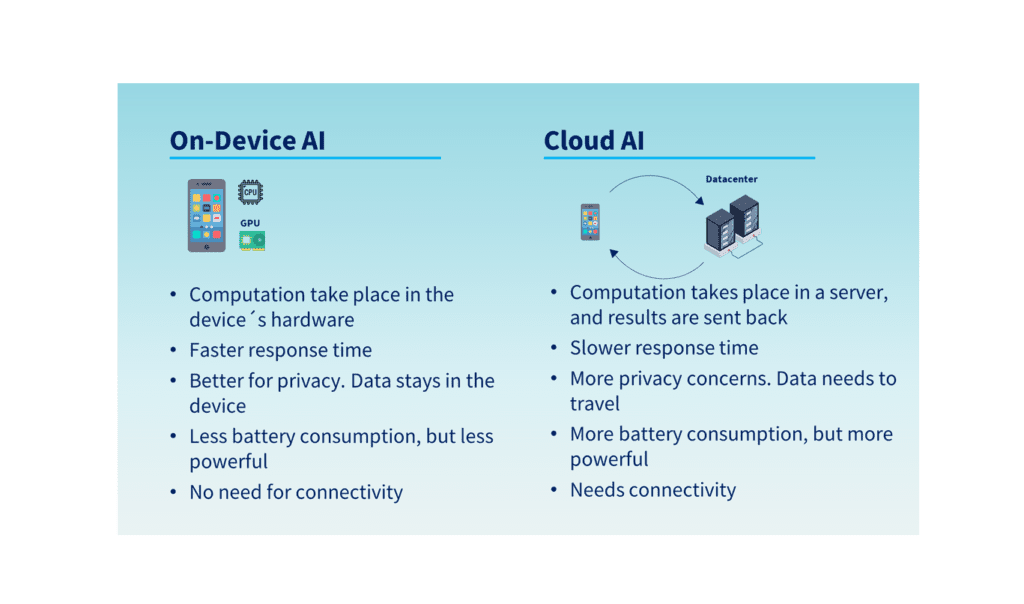

On-device AI for smartphones is expected by 2024 and will likely be combined with cloud AI. Smartphone makers will capitalize on it by raising prices.

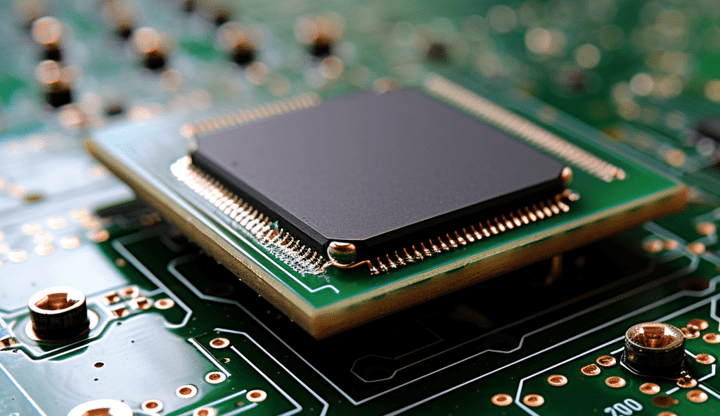

Logic chips are the “brains” of electronic devices: they process information and perform calculations. Memory chips store information and data.