Game On? AI’s Journey Ahead in Video Games

Generative AI for video games is still far from being real. The main challenges are latency of cloud gaming and the very high cost of

With the arrival of AI and the impressive computational power of GPUs, there has been some talk about whether the CPUs used in PCs and data centers could be replaced by GPUs. At the Computex technology show in Taiwan, Nvidia’s CEO, Jensen Huang, compared the performance of GPU and CPU servers for AI workloads, showing that GPUs outperform CPUs in AI training and inferencing in every possible metric.

Nobody is going to argue with Jensen’s statement. However, we must remember that GPUs and CPUs are designed to do different things, with fundamental design and architectural differences between them. In our view, GPUs will not replace CPUs (neither in PCs nor in the data center), as each type of hardware has a different purpose. It is a parallelization vs complexity dilemma. While GPUs can manage very large parallel calculations, they can struggle with sequential, more heterogeneous tasks, where CPUs excel.

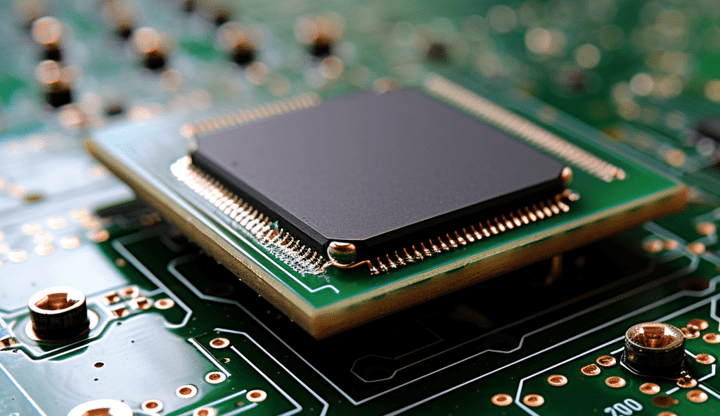

GPUs are designed to perform massively parallel but relatively simple operations. They have strong raw processing power, making them suitable for repetitive, computationally intensive work like AI training and inferencing, which requires a lot of computing power due to the billions of parameters involved.
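To make "massively parallel but simple" concrete, here is a minimal sketch in plain Python. It assumes a toy dense layer; the names `dense_layer`, `row_order` and `multiply_add_count` are illustrative and do not come from any library. Each output value is only a sum of multiply-adds and does not depend on any other output, so the rows can be computed in any order. That independence is what a GPU exploits by computing many rows at the same time.

```python
import random


def dense_layer(weights, inputs, row_order=None):
    """Multiply a weight matrix by an input vector, one output row at a time.

    row_order is a hypothetical knob for this sketch: it lets us compute the
    rows in any order to show that they are independent of each other.
    """
    n_rows = len(weights)
    order = list(range(n_rows)) if row_order is None else list(row_order)
    outputs = [0.0] * n_rows
    for r in order:
        # Each row is a simple chain of multiply-adds over the inputs.
        outputs[r] = sum(w * x for w, x in zip(weights[r], inputs))
    return outputs


def multiply_add_count(weights):
    """Number of multiply-add operations: one per weight (parameter)."""
    return sum(len(row) for row in weights)


rng = random.Random(0)  # fixed seed so the toy data is reproducible
weights = [[rng.random() for _ in range(4)] for _ in range(3)]
inputs = [rng.random() for _ in range(4)]

in_order = dense_layer(weights, inputs)
shuffled = dense_layer(weights, inputs, row_order=[2, 0, 1])
```

Computing the rows in a different order gives the same result, and the amount of work grows with the number of parameters, which is why models with billions of parameters benefit from hardware that runs many of these small operations at once.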

However, GPUs might struggle with tasks such as managing storage devices, gamepads or keyboards. One reason is that GPUs use different instruction sets than CPUs and are programmed through specialized languages and platforms. Shader languages such as GLSL and HLSL are optimized for arithmetic and logic operations on graphics data and are not well suited to general-purpose computing. CUDA, developed by Nvidia, is probably the best-known platform for programming GPUs, although strictly speaking it is a parallel computing platform and programming model rather than a shader language.

The CPU is the brain of any computing system, managing the overall execution of programs and coordinating tasks between hardware components. It is designed for general-purpose computing and for sequential, more complex tasks that are not easily parallelizable and are therefore not well suited to a GPU. CPUs excel at handling I/O (input/output) operations such as opening, reading and writing files, and at accessing different memory levels. Think about running a Python script. The code must run in order, because a given line may depend on conditional statements evaluated earlier. The CPU is the appropriate hardware for this kind of branching, step-by-step code.
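As an illustration of code that has to run step by step, consider this small Python sketch. The Collatz rule used here is our own choice of example, not something the discussion above depends on. Every iteration needs the value produced by the previous one, and the branch taken depends on that value, so the loop cannot be split into independent pieces.

```python
def collatz_steps(n):
    """Count how many steps it takes n to reach 1 under the Collatz rule."""
    steps = 0
    while n != 1:
        # The branch taken depends on the value produced by the previous step,
        # so step k cannot start before step k - 1 has finished.
        if n % 2 == 0:
            n = n // 2
        else:
            n = 3 * n + 1
        steps += 1
    return steps


steps_for_six = collatz_steps(6)  # 6, 3, 10, 5, 16, 8, 4, 2, 1
```

Starting from 6, the function takes 8 steps. Adding more cores does not make a single chain like this finish sooner, which is why a fast core with good branch handling matters more than raw parallel throughput for this type of work.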

Latency and memory hierarchy are another important consideration. CPUs normally have a more sophisticated memory hierarchy and access its different levels with lower latency than GPUs. CPUs have larger caches that are placed physically close to the CPU cores, reducing the time and energy required to access the data. While a GPU itself may be very fast, copying data between main memory (RAM) and the GPU over the PCIe bus can take a long time, introducing a performance bottleneck.
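A rough back-of-the-envelope sketch shows why the copy can dominate. The function names and all the numbers below are illustrative placeholders, not measurements of any real system, and the model assumes the same amount of data is copied to the GPU and back.

```python
def transfer_seconds(num_bytes, bus_bytes_per_second):
    """Time to move num_bytes across the bus at a given sustained bandwidth."""
    return num_bytes / bus_bytes_per_second


def offload_is_worthwhile(cpu_seconds, gpu_seconds, num_bytes, bus_bytes_per_second):
    """Compare running on the CPU with copying to the GPU, computing, and copying back."""
    # Assumption: the same number of bytes travels in each direction.
    round_trip = 2 * transfer_seconds(num_bytes, bus_bytes_per_second)
    return gpu_seconds + round_trip < cpu_seconds


# Placeholder figures chosen only to make the arithmetic easy to follow.
DATA_BYTES = 1_000_000_000        # 1 GB of data to process
BUS_BANDWIDTH = 16_000_000_000    # assumed 16 GB/s sustained bus bandwidth

one_way = transfer_seconds(DATA_BYTES, BUS_BANDWIDTH)

# A short job: the GPU computes 10x faster, but the copies cost more than they save.
short_job = offload_is_worthwhile(0.10, 0.01, DATA_BYTES, BUS_BANDWIDTH)

# A long job on the same data: the copies are small next to the compute time saved.
long_job = offload_is_worthwhile(1.00, 0.10, DATA_BYTES, BUS_BANDWIDTH)
```

With these placeholder figures, each one-way copy takes 0.0625 seconds, so the short job is faster on the CPU even though the GPU computes ten times quicker, while the long job still benefits from offloading.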

Using an easy analogy, you can think of the CPU as the orchestra conductor, organizing all the musicians. The GPU is the master violinist, doing one task extremely well and fast, but always guided by the orchestra conductor.

RISC-V, an open standard instruction set architecture (ISA), could have the potential to mirror the success of Linux two decades later, but in the hardware domain.

GPU vs CPU is a parallelization vs complexity dilemma. While GPUs can manage very large parallel calculations, they struggle with linear, more heterogeneous tasks, where CPUs excel.

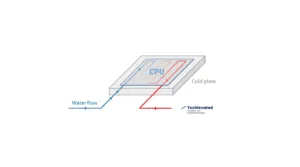

AI workloads require more server density and generate more heat. Direct-to-chip liquid cooling is emerging as the preferred cooling solution for AI with leaders like Meta, Google or Equinix adopting it.

Smartphone on-device AI is expected by 2024, and will likely be combined with cloud AI. Smartphone makers will capitalize on it by increasing prices.

Logic chips are the “brains” of the electronic device. They process information and perform calculations. Memory chips store information and data.
