Will GPUs Replace CPUs? Understanding Core Differences

GPU vs CPU is a parallelization vs complexity dilemma. While GPUs can manage very large parallel calculations, they struggle with linear, more heterogeneous tasks, where CPUs excel.
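
To make that contrast concrete, here is a minimal Python sketch. Both functions and their names are hypothetical illustrations, not taken from any real GPU or CPU library. The first does work where every element is independent, the kind a GPU can spread across thousands of cores. The second does work where each step depends on the previous one and branches on it, the kind that tends to suit a CPU.

```python
def scale_all(values, factor):
    # Each output depends only on its own input, so in principle every
    # element could be computed at the same time (GPU-friendly work).
    return [v * factor for v in values]


def running_balance(start, transactions):
    # Each step needs the previous balance and branches on it, so the
    # steps have to run in order (CPU-friendly work).
    balance = start
    history = []
    for amount in transactions:
        if balance + amount >= 0:
            balance += amount  # accept the transaction
        # otherwise reject it and keep the old balance
        history.append(balance)
    return history
```

Calling `scale_all([1, 2, 3], 2)` touches each number independently, while `running_balance(10, [-5, -10, 3])` cannot decide what to do with the second transaction until the first one has been processed.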

Ever wondered why your iPhone lags behind ChatGPT or Google's Gemini? That's because those AI models reside in the cloud, where there is ample computing power, whereas your smartphone's computing power is limited by its own hardware. When you use ChatGPT on your smartphone, the app connects to a data center over Wi-Fi or 5G, sends a query and waits for a response.

In 2024 edge AI should arrive on smartphones and other edge devices, and their hardware should be able to perform some AI calculations directly. Smartphone makers like Apple and Samsung are developing more powerful systems-on-chip (SoCs) every year to power edge AI. The Neural Engine in Apple's A17 Pro chip, used in the iPhone 15 Pro, can perform 35 trillion operations per second vs. 17 trillion for its predecessor, the A16. And Apple is throwing $1 billion annually into the AI race.
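
As a quick sanity check on those two throughput figures, the short calculation below works out the generation-over-generation jump. The variable names are just illustrative, and the numbers are the ones quoted above.

```python
# Throughput figures quoted in the text, in trillions of operations per second.
newer_tops = 35
older_tops = 17

speedup = newer_tops / older_tops
increase_pct = (newer_tops - older_tops) / older_tops * 100

print(f"{speedup:.2f}x faster, a {increase_pct:.1f}% increase")
# prints "2.06x faster, a 105.9% increase"
```

In other words, the newer chip roughly doubles the number of AI operations per second.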

AI-capable smartphones will likely combine on-device AI with cloud AI. Samsung has already announced that its Galaxy AI, Gauss, will be a combination of both approaches. Simpler computing tasks like voice commands, offline language translation or personal assistant tasks will be done on the device itself. Cloud AI, through LLM services like ChatGPT or Gemini, will step in for heavier tasks like live language translation or traffic prediction.
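
The split described above could be sketched roughly as follows. This is a hypothetical illustration, not how Samsung or Apple actually implement it: the task names come from the examples in the text, and the `route` function and its fallback rule are assumptions made for the sketch.

```python
# Hypothetical list of light tasks, taken from the examples in the text.
ON_DEVICE_TASKS = {
    "voice command",
    "offline language translation",
    "personal assistant",
}


def route(task, has_connection=True):
    """Return where a task would run: 'on-device', 'cloud' or 'unavailable'."""
    if task in ON_DEVICE_TASKS:
        return "on-device"
    # Assumption: anything not known to be light counts as a heavy task
    # and needs a data center, which in turn needs a network connection.
    if has_connection:
        return "cloud"
    return "unavailable"
```

With this sketch, `route("voice command")` stays on the phone even without a signal, while `route("traffic prediction")` goes to the cloud and becomes unavailable when the connection drops.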

Cloud AI may run on older phones because, as long as you have the app, the heavy computing happens in a data center. But you will have to buy a new-generation smartphone to access edge, on-device AI features, given that previous generations lack the necessary hardware.

In any case, be ready to open your wallet. The billions put into R&D need a return and consumers will likely pay the bill.

Edge AI promises a big step up in power and productivity, but don’t be surprised if your wallet feels a bit lighter on the way. The price of progress, my friend, is often counted in dollars and cents.

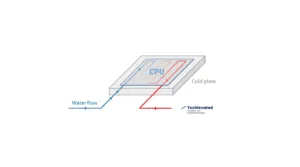

AI workloads require more server density and generate more heat. Direct-to-chip liquid cooling is emerging as the preferred cooling solution for AI, with leaders like Meta, Google and Equinix adopting it.

Smartphone on-device AI is expected by 2024, and will likely be combined with cloud AI. Smartphone makers will capitalize on it by increasing prices.

Logic chips are the “brains” of the electronic device. They process information and perform calculations. Memory chips store information and data.
