Game On? AI’s Journey Ahead in Video Games

Generative AI for video games is still far from becoming a reality. The main challenges are the latency of cloud gaming and the very high cost of on-device AI hardware.

The term AI has become a common buzzword, but when it comes to video games it’s crucial to distinguish between the “AI” used in games and the AI we encounter in everyday discussions.

ChatGPT and Midjourney are generative AI models, meaning they can generate new content such as text, images or video. They are trained on large datasets and learn to produce content similar to the examples they were given.

Video game AI, unlike self-learning systems, relies on techniques such as nested decision trees and pathfinding. Decision trees simulate non-player character (NPC) decision-making, with nodes representing decision points and branches indicating the possible choices. In pathfinding, an algorithm determines the optimal or most efficient path for a character to move from one point to another. These techniques are not generative AI: they are rule-based, typically authored by designers rather than learned from data, and they react to the player’s actions based on a set of known parameters.
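To make the distinction concrete, here is a minimal Python sketch of both techniques. The guard NPC, its health and distance thresholds, and the names `decide`, `guard_tree` and `a_star` are hypothetical illustrations, not code from any game engine; the pathfinder uses A* search with a Manhattan-distance heuristic, a common textbook choice for grid maps but not the only one. Every rule is fixed in advance: nothing here learns.

```python
import heapq
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple, Union

# --- Decision tree: every branch is written by hand; nothing is learned ---

@dataclass
class Action:
    name: str  # leaf node: the behavior the NPC will perform

@dataclass
class Decision:
    test: Callable[[dict], bool]  # decision point evaluated against the game state
    if_true: "Node"               # branch taken when the test passes
    if_false: "Node"              # branch taken otherwise

Node = Union[Action, Decision]

def decide(node: Node, state: dict) -> str:
    """Walk from the root, following branches until a leaf action is reached."""
    while isinstance(node, Decision):
        node = node.if_true if node.test(state) else node.if_false
    return node.name

# Hypothetical guard NPC: flee when badly hurt, attack a nearby player, else patrol.
guard_tree = Decision(
    test=lambda s: s["health"] < 30,
    if_true=Action("flee"),
    if_false=Decision(
        test=lambda s: s["player_distance"] < 5,
        if_true=Action("attack"),
        if_false=Action("patrol"),
    ),
)

# --- Pathfinding: A* search on a grid ('#' marks a wall) ---

Cell = Tuple[int, int]

def a_star(grid: List[str], start: Cell, goal: Cell) -> Optional[List[Cell]]:
    """Return the shortest 4-directional path from start to goal, or None."""
    def h(p: Cell) -> int:
        # Manhattan distance never overestimates with unit-cost 4-way moves.
        return abs(p[0] - goal[0]) + abs(p[1] - goal[1])

    rows, cols = len(grid), len(grid[0])
    open_heap = [(h(start), 0, start)]  # entries: (g + h, g, cell)
    came_from = {start: None}
    g_cost = {start: 0}
    while open_heap:
        _, g, cur = heapq.heappop(open_heap)
        if cur == goal:
            path = []
            while cur is not None:  # follow parent links back to the start
                path.append(cur)
                cur = came_from[cur]
            return path[::-1]
        if g > g_cost[cur]:
            continue  # outdated entry; a cheaper route to cur was found later
        r, c = cur
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] != "#":
                new_g = g + 1
                if new_g < g_cost.get(nxt, float("inf")):
                    g_cost[nxt] = new_g
                    came_from[nxt] = cur
                    heapq.heappush(open_heap, (new_g + h(nxt), new_g, nxt))
    return None

print(decide(guard_tree, {"health": 80, "player_distance": 3}))  # attack
level = ["....",
         ".##.",
         "...."]
print(len(a_star(level, (0, 0), (2, 3))) - 1)  # 5 moves
```

Because both the tree and the search are deterministic, the same game state always produces the same behavior; nothing in this code changes as the game is played.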

Many users now wonder whether NPC systems could evolve into self-learning entities, dynamically adapting to player actions and creating endless alternative scenarios and pathways. While some speculate about integrating such technology into video games, we do not think it will become a reality any time soon.

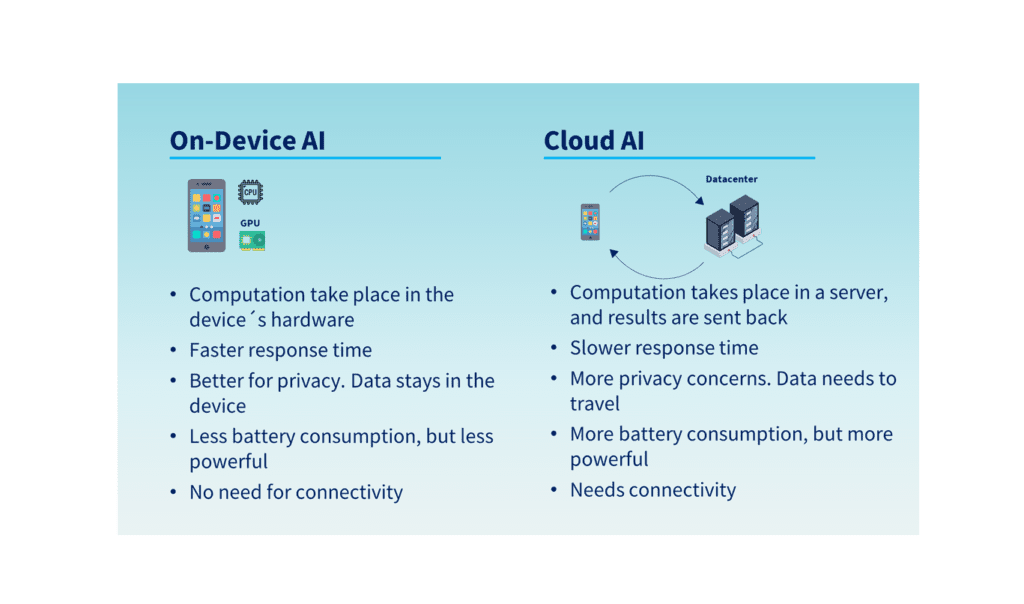

The first drawback is hardware and cost. Large language models (LLMs) like ChatGPT run in the cloud, which means there is a delay when interacting with them. A two-second lag might not matter when receiving a written answer from ChatGPT, but in a video game two seconds can have a big impact on the gameplay experience. Cloud gaming platforms like Xbox Cloud Gaming already struggle with lag, so adding generative AI on top could worsen latency and render some games unplayable.
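A rough way to see why two seconds matters: a game renders a new frame every few milliseconds, so a delay can be expressed as a number of frames. The sketch below assumes common frame-rate targets of 30 and 60 fps (our assumption, not figures from the text) and uses the two-second lag mentioned above; the function names are illustrative.

```python
def frame_budget_ms(fps: int) -> float:
    """Time the game has to produce one frame at a given frame rate."""
    return 1000.0 / fps

def frames_elapsed(delay_ms: int, fps: int) -> int:
    """Whole frames rendered while the game waits delay_ms for a response.

    Integer arithmetic avoids floating-point rounding at exact boundaries.
    """
    return delay_ms * fps // 1000

# Assumed frame-rate targets; 2,000 ms is the two-second lag from the text.
for fps in (30, 60):
    print(f"{fps} fps: {frame_budget_ms(fps):.1f} ms per frame, "
          f"{frames_elapsed(2000, fps)} frames during a 2 s response")
```

At 60 fps each frame has about 16.7 ms, so a two-second response would arrive 120 frames after the player acted.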

The alternative, on-device AI, where your computer or console handles the calculations, faces challenges too. The size and cost of the chips required for advanced AI make it technically and economically impossible to incorporate into today’s video games. For instance, a single Nvidia H100 GPU used in AI training costs around $10,000, 20x the price of a PlayStation 5, and an Nvidia DGX H100 system, which packs eight of these GPUs, weighs up to 130.5 kg (287.6 lbs). Intel has been working on bringing some AI functionality to laptops with its Intel Core Ultra processors, which combine a CPU, GPU and NPU (neural processing unit) in a single package. These chips can speed up tasks like video editing, improve image resolution and accelerate some calculations, but they are very far from anything close to self-learning video game AI.
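The figures in this paragraph can be sanity-checked with simple arithmetic. This sketch assumes a PlayStation 5 price of about $500 (our assumption; the text only gives the 20x ratio) and converts the quoted 287.6 lbs to kilograms using the exact definition of the international pound.

```python
# Figures quoted in the text; the console price is our assumption.
GPU_PRICE_USD = 10_000      # Nvidia H100 price as quoted above
CONSOLE_PRICE_USD = 500     # assumption: rough PlayStation 5 price behind "20x"
QUOTED_WEIGHT_LB = 287.6    # weight quoted above, in pounds
KG_PER_LB = 0.45359237      # exact definition of the international pound

price_ratio = GPU_PRICE_USD / CONSOLE_PRICE_USD
weight_kg = QUOTED_WEIGHT_LB * KG_PER_LB

print(f"Price ratio: {price_ratio:.0f}x")  # 20x
print(f"Weight: {weight_kg:.1f} kg")       # 130.5 kg
```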

A second consideration: even if generative AI for video games became feasible, do we really want it? What if the AI is much better than us? Players want different levels of difficulty, but they also want to beat their opponent. An overly intelligent rival would make games less enjoyable, resulting in lower customer satisfaction and lower sales for video game developers. Video games must also follow a logical plot. If AI started developing its own behaviors, it would open thousands of different paths, not always coherent, and NPC actions could at some point become irrational.

Take-Two Interactive’s CEO, Strauss H. Zelnick, made it clear in a conference call with analysts that although AI is a very useful tool for video game developers and will definitely increase productivity, the human brain will always be required to make good video games.

“Our view is that AI will allow us to do a better job and to do a more efficient job when you are talking about tools, and they are simply better and more effective tools. I wish I could say that the advances in AI will make it easier to create hits. Obviously, it won’t. Hits are created by genius and datasets, plus compute, plus large language models does not equal genius. Genius is the domain of human beings, and I believe it will stay that way. However, I think jobs can be made a whole lot easier and more efficient by developments in AI, and we are certainly looking forward to that. And as I said, we are already putting it in practice every day.”


RISC-V, an open standard instruction set architecture (ISA), could have the potential to mirror the success of Linux two decades later, but in the hardware domain.

GPU vs CPU is a parallelization vs complexity dilemma. While GPUs can manage very large parallel calculations, they struggle with linear, more heterogeneous tasks, where CPUs excel.

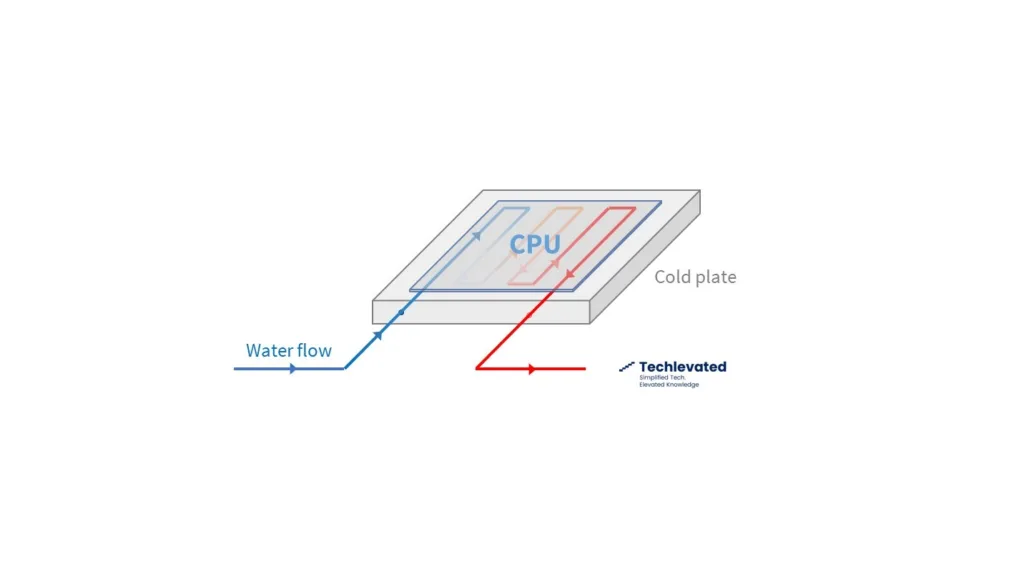

AI workloads require more server density and generate more heat. Direct-to-chip liquid cooling is emerging as the preferred cooling solution for AI with leaders like Meta, Google or Equinix adopting it.

Smartphone on-device AI is expected by 2024, and will likely be combined with cloud AI. Smartphone makers will capitalize on it by increasing prices.

Logic chips are the “brains” of the electronic device. They process information and perform calculations. Memory chips store information and data.

The information provided on this site is for informational purposes only. The content is based on the authors’ knowledge and research at the time of writing. It is not intended as professional advice or a substitute for professional consultation. Readers are encouraged to conduct their own research and consult with appropriate experts before making any decisions based on the information provided. The blog may also include opinions that do not necessarily reflect the views of the blog owners or affiliated individuals. The blog owners and authors are not responsible for any errors or omissions in the content or for any actions taken in reliance on the information provided. Additionally, as technology is rapidly evolving, the information presented may become outdated, and the blog owners and authors make no commitment to update the content accordingly.