Fluxless TCB vs TCB

As interconnection pitches shrink below 10µm for advanced logic and memory applications, fluxless TCB solves the issues that standard TCB encounters with the flux.

Secure Multi-Party Computation (SMPC) is a technique used in artificial intelligence and machine learning that lets multiple parties collaboratively analyze and compute on their combined data without revealing their individual inputs.

In simpler terms, imagine you have a group of friends and you want to play a game together, but you don’t want anyone to know your individual answers or scores. SMPC allows you to do just that. It ensures that each person’s data remains private and secure while still being able to work together and make decisions collectively.

In AI and machine learning, SMPC is used when multiple organizations or entities want to combine their data to build a more accurate and powerful model without sharing sensitive information. Each party contributes their data, but the computations are performed in a way that no party can see or access the individual data inputs. This allows for collaboration and learning from diverse datasets while preserving privacy and confidentiality.

SMPC uses cryptographic techniques and algorithms to enable secure computations. It ensures that even though the data and computations are distributed across different parties, the final result is accurate and reliable, while preventing any party from gaining access to others’ private data.
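One common building block for this kind of computation is additive secret sharing. The following is a minimal sketch, not a production protocol: it assumes honest parties, simulates all of them inside one process, and uses a hypothetical public modulus `MODULUS` and illustrative function names (`share`, `secure_sum`) that do not come from any particular library.

```python
import secrets

# Hypothetical public modulus; it only needs to exceed any possible sum.
MODULUS = 2**61 - 1


def share(value, n_parties):
    """Split value into n_parties random shares that add up to it mod MODULUS."""
    shares = [secrets.randbelow(MODULUS) for _ in range(n_parties - 1)]
    shares.append((value - sum(shares)) % MODULUS)
    return shares


def secure_sum(private_inputs):
    """Simulate parties computing the sum of their inputs from shares only."""
    n = len(private_inputs)
    # distributed[i][j] is the share that party i sends to party j.
    distributed = [share(v, n) for v in private_inputs]
    # Each party j adds up the shares it received; a single share is uniformly
    # random, so it reveals nothing about the sender's input.
    partial_sums = [sum(distributed[i][j] for i in range(n)) % MODULUS
                    for j in range(n)]
    # Publishing only the partial sums reveals the total and nothing more.
    return sum(partial_sums) % MODULUS


salaries = [52_000, 61_500, 48_250]  # each value is private to one party
print(secure_sum(salaries))  # 161750
```

Each party only ever sees random-looking shares and the final total, which is the sense in which the result stays accurate while the inputs stay private.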

In summary, SMPC allows several parties to work together on analyzing their combined data while keeping individual data private and secure. It is a powerful technique that enables collaborative learning and decision-making without compromising privacy.

One significant case study involving Secure Multi-Party Computation (SMPC) is the Millionaire’s Problem. This problem serves as a foundational example in the field of secure computation and illustrates how multiple parties can perform calculations on sensitive data without revealing that data to each other.

Background: The Millionaire’s Problem was formulated by Andrew Yao in 1982 as a theoretical problem to explore the concept of secure multi-party computation. The problem is simple in concept but highlights the potential applications of SMPC in preserving privacy while performing computations.

Problem Statement: Two millionaires want to compare their wealth without disclosing the exact amount of their wealth to each other. They only want to know who is richer without revealing their specific assets.

Solution using Secure Multi-Party Computation: SMPC lets the millionaires compare their wealth without revealing the actual figures. The two parties jointly evaluate a comparison function on their private inputs, so that each learns only the one-bit outcome (who is richer) and nothing else about the other's wealth.
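As a concrete illustration, the sketch below loosely follows the public-key approach usually associated with Yao's 1982 paper, with wealth restricted to a small range of integers. Everything in it is an assumption made for illustration: the hard-coded toy RSA primes, the range `max_wealth`, the prime range for `p`, and the function names. Both parties are simulated in one function, and the parameters are far too small to be secure.

```python
import random

# Toy RSA key pair for Alice. The primes are tiny and hard-coded purely for
# illustration; nothing here is secure.
RSA_P, RSA_Q = 1009, 1013
RSA_N = RSA_P * RSA_Q
RSA_E = 65537
RSA_D = pow(RSA_E, -1, (RSA_P - 1) * (RSA_Q - 1))  # needs Python 3.8+


def is_prime(n):
    """Trial division; fine for the small numbers used here."""
    if n < 2:
        return False
    f = 2
    while f * f <= n:
        if n % f == 0:
            return False
        f += 1
    return True


def well_separated(values, p):
    """True if every pair of values differs by at least 2 modulo p."""
    return all(
        (a - b) % p not in (0, 1, p - 1)
        for idx, a in enumerate(values)
        for b in values[idx + 1:]
    )


def alice_at_least_as_rich(alice_wealth, bob_wealth, max_wealth=10, rng=None):
    """Return True iff alice_wealth >= bob_wealth (both in 1..max_wealth)."""
    rng = rng or random.Random()
    i, j = alice_wealth, bob_wealth
    while True:
        # Bob: encrypt a random x under Alice's public key, then offset by j.
        x = rng.randrange(2, RSA_N)
        m = (pow(x, RSA_E, RSA_N) - j + 1) % RSA_N  # the value sent to Alice
        # Alice: decrypt m + u - 1 for every possible wealth u. The j-th
        # result equals x, but she cannot tell which one that is.
        ys = [pow((m + u - 1) % RSA_N, RSA_D, RSA_N)
              for u in range(1, max_wealth + 1)]
        # Alice: reduce modulo a random prime; in this sketch the whole round
        # restarts if two reduced values are too close together.
        p = rng.choice([c for c in range(500, 1000) if is_prime(c)])
        zs = [y % p for y in ys]
        if well_separated(zs, p):
            break
    # Alice: keep the first i values, add 1 to the rest, send them and p to Bob.
    ws = [z if u <= i else (z + 1) % p for u, z in enumerate(zs, start=1)]
    # Bob: his j-th entry is unchanged exactly when j <= i.
    return ws[j - 1] == x % p


print(alice_at_least_as_rich(7, 4))  # True
print(alice_at_least_as_rich(3, 8))  # False
```

In an actual run, Bob would learn the outcome from the final check and then tell Alice; neither side sees the other's number, only masked or encrypted values.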

Significance: The Millionaire’s Problem is significant because it demonstrates the power of SMPC in preserving privacy while allowing parties to compute over sensitive data. It paved the way for the development of cryptographic techniques and protocols that enable secure computations in various applications, such as financial transactions, medical data analysis, and more.

Secure Multi-Party Computation has found practical applications in several domains:

In 1982, Andrew Yao introduced the concept of secure multi-party computation in his seminal paper “Protocols for Secure Computations.” Yao’s work laid the foundation for theoretical research in secure computation but did not provide practical protocols.

In the 1980s, a significant breakthrough came with the introduction of the concept of secure function evaluation (SFE). Researchers like Oded Goldreich, Silvio Micali, and Shafi Goldwasser made significant contributions in this field, addressing fundamental issues related to security definitions and computational efficiency.

In the 1990s, the focus of SMPC research shifted towards developing more efficient protocols and exploring real-world applications. Researchers such as Yehuda Lindell and Benny Pinkas later refined and analyzed techniques like garbled circuits, a construction originally due to Yao, which allow two parties to evaluate a function without revealing intermediate values.
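To give a feel for the garbled-circuit idea, here is a minimal sketch of garbling and evaluating a single AND gate. It is a simplified teaching construction under stated assumptions, not any specific published scheme: the SHA-256 based pad, the all-zero check tag, and the names `garble_and_gate` and `evaluate` are illustrative choices, and the oblivious-transfer step by which the evaluator would normally obtain its input label is left out.

```python
import hashlib
import secrets

LABEL_LEN = 16
CHECK = bytes(LABEL_LEN)  # all-zero tag marking a correct decryption


def _xor(a, b):
    return bytes(x ^ y for x, y in zip(a, b))


def _pad(label_a, label_b):
    """Derive a 32-byte one-time pad from the two input labels."""
    return hashlib.sha256(label_a + label_b).digest()


def garble_and_gate():
    """Return (labels, table) for one AND gate with wires a, b and out."""
    # Each wire gets two random labels: index 0 encodes bit 0, index 1 bit 1.
    labels = {w: (secrets.token_bytes(LABEL_LEN), secrets.token_bytes(LABEL_LEN))
              for w in ("a", "b", "out")}
    table = []
    for bit_a in (0, 1):
        for bit_b in (0, 1):
            plaintext = labels["out"][bit_a & bit_b] + CHECK
            pad = _pad(labels["a"][bit_a], labels["b"][bit_b])
            table.append(_xor(pad, plaintext))
    secrets.SystemRandom().shuffle(table)  # hide which row encodes which inputs
    return labels, table


def evaluate(table, label_a, label_b):
    """Recover the output label using one label per input wire."""
    pad = _pad(label_a, label_b)
    for row in table:
        candidate = _xor(pad, row)
        if candidate[LABEL_LEN:] == CHECK:
            return candidate[:LABEL_LEN]
    raise ValueError("no row decrypted correctly")


labels, table = garble_and_gate()
out = evaluate(table, labels["a"][1], labels["b"][0])
print(out == labels["out"][0])  # True, since 1 AND 0 = 0
```

The evaluator holds only one random label per wire, so it can compute the output label without learning which bits the labels stand for; only the garbler's mapping from output label to bit reveals the result.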

In the 2000s, advancements in cryptography, particularly homomorphic encryption and zero-knowledge proofs, further enhanced SMPC techniques. These developments made it feasible to perform complex computations on encrypted data, thus expanding the scope and practicality of SMPC.

Since the 2010s, there has been a growing interest in applying SMPC to machine learning and artificial intelligence. This integration enables collaborative training of models on data from multiple parties while preserving privacy. Numerous research studies and frameworks, such as the SecureML and Sharemind platforms, have emerged to address the challenges of secure machine learning using SMPC.

In recent years, there has been an increasing recognition of the importance of privacy in data-driven applications. This has led to further advancements in SMPC techniques, including improved efficiency, scalability, and support for different types of computations.

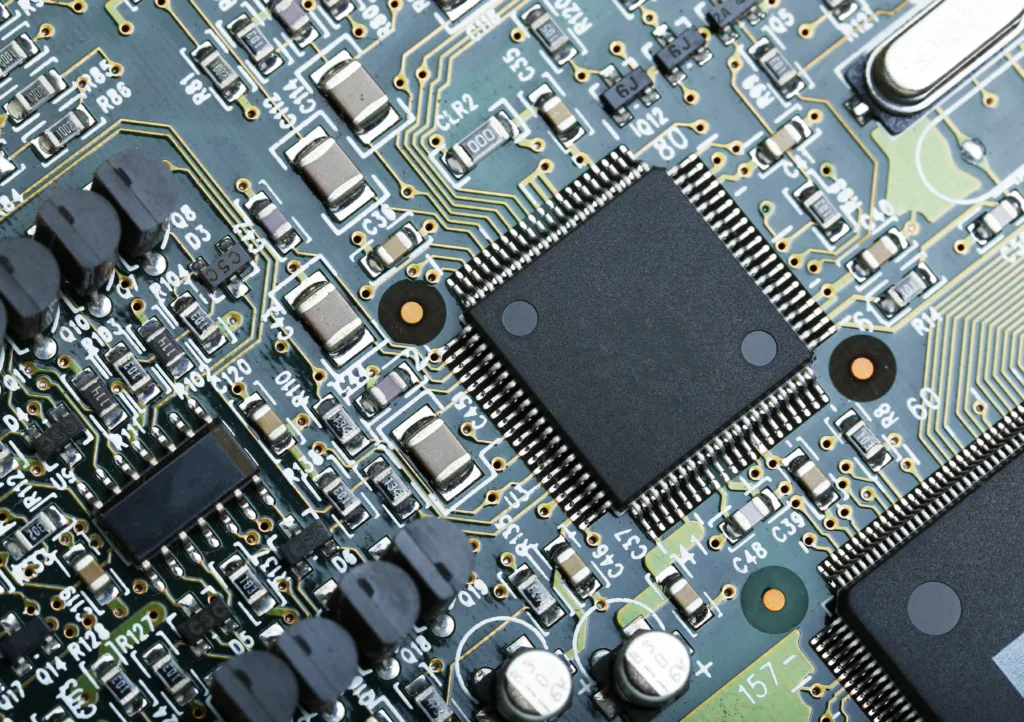

The metal pitch refers to the distance between the centers of two adjacent metal interconnect lines on an integrated circuit (IC). Since transistors evolved into 3D structures, this measurement has lost significance.

The front-end and back-end are highly interdependent. A constant feedback loop between front and back-end engineers is necessary to improve manufacturing yields.

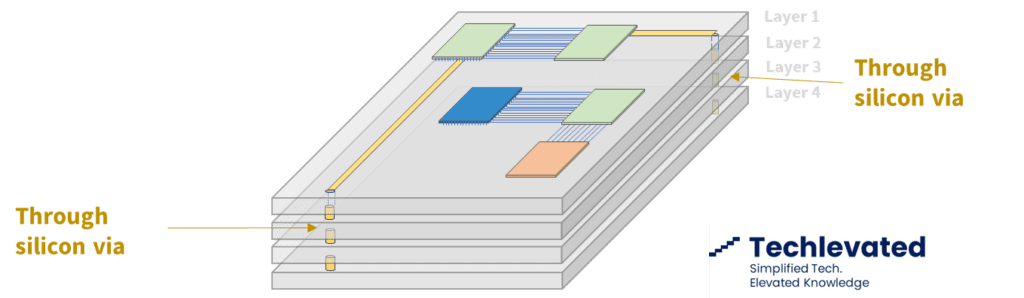

Built directly into the silicon, through silicon vias (TSV) facilitate 3D IC integration and allow for more compact packaging. They have become the default solution to interconnect different chip layers or to stack chips vertically.

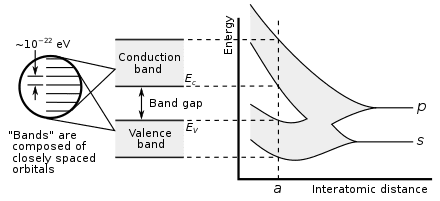

Silicon carbide (SiC) is used in electric vehicles due to its wide bandgap and great thermal conductivity. Gallium nitride (GaN) shares many characteristics with SiC while also minimizing RF noise.

GPU vs CPU is a parallelization vs complexity dilemma. While GPUs can manage very large parallel calculations, they struggle with linear, more heterogeneous tasks, where CPUs excel.
